Run Externally

Learn how to configure the dbt workflow externally to ingest dbt data from your data sources.

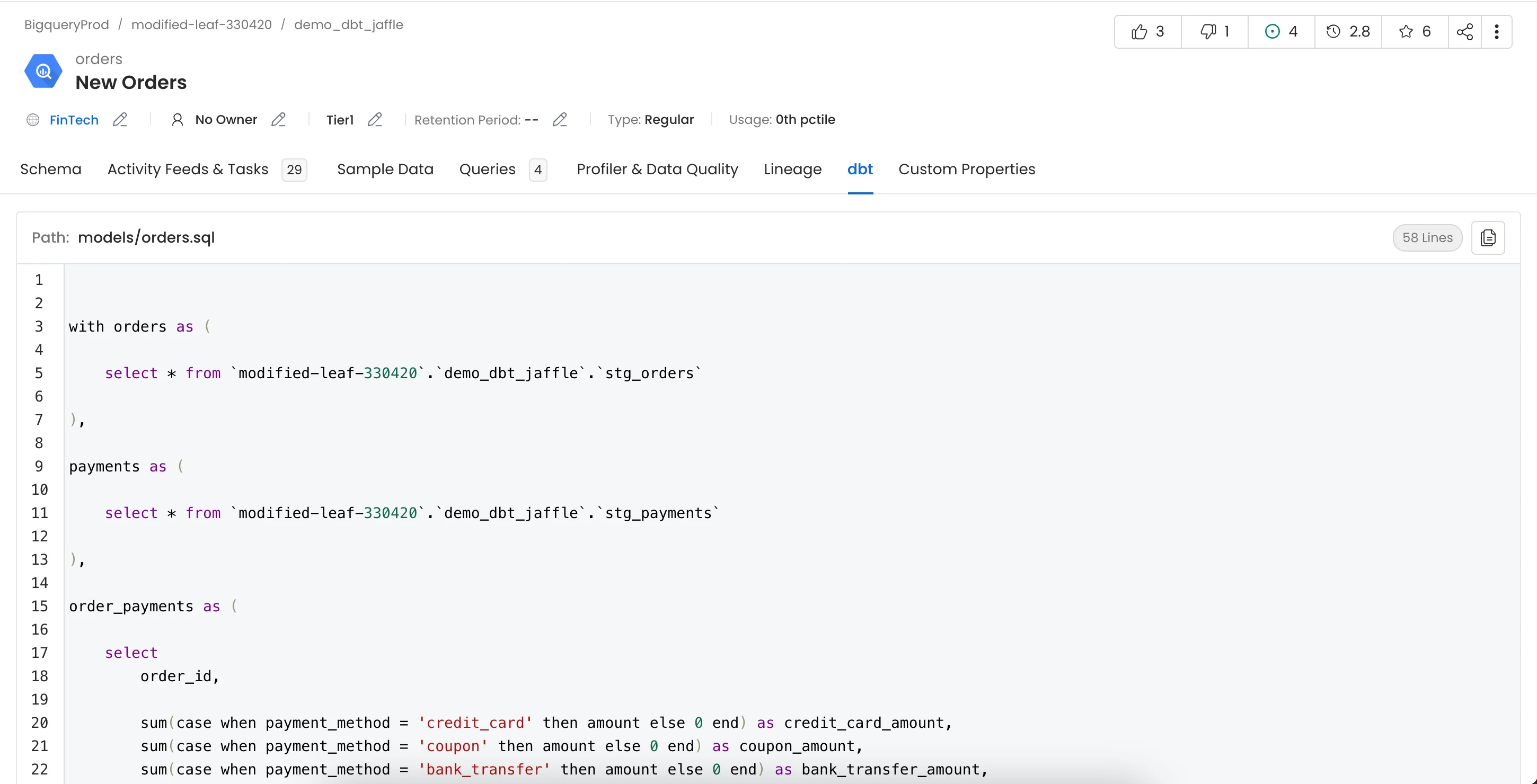

Once the metadata ingestion runs correctly and we are able to explore the service Entities, we can add the dbt information.

This will populate the dbt tab from the Table Entity Page.

dbt

We can create a workflow that will obtain the dbt information from the dbt files and feed it to OpenMetadata. The dbt Ingestion will be in charge of obtaining this data.

1. Define the YAML Config

Select the yaml config from one of the below sources:

The dbt files must be present on the selected source, and the credentials you provide must have permission to read them. Enter the name of your OpenMetadata database service in the serviceName key of the YAML.

1. AWS S3 Buckets

In this configuration we will be fetching the dbt manifest.json, catalog.json and run_results.json files from an S3 bucket.

Source Config - Type

- dbtConfigType: s3

- awsAccessKeyId & awsSecretAccessKey: When you interact with AWS, you specify your AWS security credentials to verify who you are and whether you have permission to access the resources that you are requesting. AWS uses the security credentials to authenticate and authorize your requests (docs).

Access keys consist of two parts: An access key ID (for example, AKIAIOSFODNN7EXAMPLE), and a secret access key (for example, wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY).

You must use both the access key ID and secret access key together to authenticate your requests.

You can find further information on how to manage your access keys here.

awsSessionToken: If you are using temporary credentials to access your services, you will need to provide the AWS Access Key ID and AWS Secret Access Key. Temporary credentials also include an AWS Session Token, which you set in this field.

awsRegion: Each AWS Region is a separate geographic area in which AWS clusters data centers (docs).

As AWS can have instances in multiple regions, we need to know which region the service you want to reach belongs to.

Note that the AWS Region is the only required parameter when configuring a connection. When connecting to the services programmatically, there are different ways in which we can extract and use the rest of AWS configurations.

You can find further information about configuring your credentials here.

endPointURL: To connect programmatically to an AWS service, you use an endpoint. An endpoint is the URL of the entry point for an AWS web service. The AWS SDKs and the AWS Command Line Interface (AWS CLI) automatically use the default endpoint for each service in an AWS Region. But you can specify an alternate endpoint for your API requests.

Find more information on AWS service endpoints.

profileName: A named profile is a collection of settings and credentials that you can apply to an AWS CLI command. When you specify a profile to run a command, the settings and credentials are used to run that command. Multiple named profiles can be stored in the config and credentials files.

Set this field if you'd like to use a profile other than default.

Find more information about Named profiles for the AWS CLI here.

assumeRoleArn: Typically, you use AssumeRole within your account or for cross-account access. In this field you'll set the ARN (Amazon Resource Name) of the role to assume, which may belong to another account.

A user who wants to access a role in a different account must also have permissions that are delegated from the account administrator. The administrator must attach a policy that allows the user to call AssumeRole for the ARN of the role in the other account.

This is a required field if you'd like to AssumeRole.

Find more information on AssumeRole.

When using Assume Role authentication, ensure you provide the following details:

- AWS Region: Specify the AWS region for your deployment.

- Assume Role ARN: Provide the ARN of the role in your AWS account that OpenMetadata will assume.

assumeRoleSessionName: An identifier for the assumed role session. Use the role session name to uniquely identify a session when the same role is assumed by different principals or for different reasons.

By default, we'll use the name OpenMetadataSession.

Find more information about the Role Session Name.

assumeRoleSourceIdentity: The source identity specified by the principal that is calling the AssumeRole operation. You can use source identity information in AWS CloudTrail logs to determine who took actions with a role.

Find more information about Source Identity.

dbt Prefix Configuration

dbtPrefixConfig: Optional config to specify the bucket name and directory path where the dbt files are stored. If config is not provided ingestion will scan all the buckets for dbt files.

dbtBucketName: Name of the bucket where the dbt files are stored.

dbtObjectPrefix: Path of the folder where the dbt files are stored.

Follow the documentation here to configure multiple dbt projects
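As a rough sketch, the S3 options above might be assembled as follows. The nesting under sourceConfig.config.dbtConfigSource and the dbtSecurityConfig key are assumptions about the workflow schema, and every value (service name, bucket, prefix, region) is a placeholder; check them against the YAML template for your OpenMetadata version.

```yaml
source:
  type: dbt
  # Name of the existing database service in OpenMetadata (placeholder)
  serviceName: my_database_service
  sourceConfig:
    config:
      type: DBT
      dbtConfigSource:
        dbtConfigType: s3
        # Assumed key that groups the AWS credentials described above
        dbtSecurityConfig:
          awsAccessKeyId: AKIAIOSFODNN7EXAMPLE
          awsSecretAccessKey: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
          awsRegion: us-east-1
        # Optional: without it, ingestion scans all buckets for dbt files
        dbtPrefixConfig:
          dbtBucketName: my-dbt-bucket
          dbtObjectPrefix: dbt/target
```

Only the fields you actually use need to appear; for example, awsSessionToken is relevant only with temporary credentials.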

Source Config

dbtUpdateDescriptions: Configuration to update the description from dbt or not. If set to true descriptions from dbt will override the already present descriptions on the entity. For more details visit here

dbtUpdateOwners: Configuration to update the owner from dbt or not. If set to true owners from dbt will override the already present owners on the entity. For more details visit here

includeTags: true or false, to ingest tags from dbt. Default is true.

dbtClassificationName: Custom OpenMetadata Classification name for dbt tags.

databaseFilterPattern, schemaFilterPattern, tableFilterPattern: Add filters to filter out models from the dbt manifest. Note that the filter supports regex as include or exclude. You can find examples here
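The options above could look like this in the YAML. This is a minimal sketch: the includes and excludes keys and the regex values are illustrative assumptions, and the dbtConfigSource block is left out for brevity.

```yaml
source:
  type: dbt
  serviceName: my_database_service   # placeholder service name
  sourceConfig:
    config:
      type: DBT
      # dbtConfigSource omitted here; it holds the source-specific options
      dbtUpdateDescriptions: true    # dbt descriptions override existing ones
      dbtUpdateOwners: false         # keep owners already set on the entity
      includeTags: true
      dbtClassificationName: dbtTags # placeholder classification name
      # Assumed filter shape: regex lists under includes / excludes
      databaseFilterPattern:
        includes:
          - ^analytics$
      tableFilterPattern:
        excludes:
          - ^tmp_.*
```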

Sink Configuration

To send the metadata to OpenMetadata, it needs to be specified as type: metadata-rest.

Workflow Configuration

The main property here is the openMetadataServerConfig, where you can define the host and security provider of your OpenMetadata installation.

Logger Level

You can specify the loggerLevel depending on your needs. If you are trying to troubleshoot an ingestion, running with DEBUG will give you far more traces for identifying issues.

JWT Token

JWT tokens allow your clients to authenticate against the OpenMetadata server. You can find more details on enabling JWT tokens here.

Refer to the JWT Troubleshooting section for any issues in your JWT configuration.

Store Service Connection

If set to true (default), we will store the sensitive information either encrypted via the Fernet Key in the database or externally, if you have configured any Secrets Manager.

If set to false, the service will be created, but the service connection information will only be used by the Ingestion Framework at runtime, and won't be sent to the OpenMetadata server.

SSL Configuration

If you have added SSL to the OpenMetadata server, then you will need to handle the certificates when running the ingestion too. You can either set verifySSL to ignore, or have it as validate, which will require you to set the sslConfig.caCertificate with a local path where your ingestion runs that points to the server certificate file.

Find more information on how to troubleshoot SSL issues here.

ingestionPipelineFQN

Fully qualified name of ingestion pipeline, used to identify the current ingestion pipeline.
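Putting the sink and workflow settings together, a sketch might look like the block below. The hostPort, authProvider, securityConfig.jwtToken and storeServiceConnection key names are assumptions not spelled out in the text above, and the host, token and certificate path are placeholders.

```yaml
sink:
  type: metadata-rest
  config: {}
workflowConfig:
  loggerLevel: INFO   # switch to DEBUG when troubleshooting an ingestion
  openMetadataServerConfig:
    hostPort: https://openmetadata.example.com/api   # placeholder host
    authProvider: openmetadata
    securityConfig:
      jwtToken: "<jwt-token>"                        # placeholder token
    # true stores the connection details (encrypted or in a Secrets Manager)
    storeServiceConnection: true
    # validate requires sslConfig.caCertificate; ignore skips verification
    verifySSL: validate
    sslConfig:
      caCertificate: /path/to/server-certificate.pem
```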

2. Google Cloud Storage Buckets

In this configuration we will be fetching the dbt manifest.json, catalog.json and run_results.json files from a GCS bucket.

Source Config - Type

- dbtConfigType: gcs

credentials: You can authenticate with your GCS instance in one of two ways: provide a GCP Credentials Path that points to the service account key file, or pass the GCP Credentials Values taken from that file directly.

Check out this documentation on how to create and download service account keys.

gcpConfig:

1. Passing the raw credential values provided by GCS. This requires us to provide the following information, all provided by GCS:

- type: Credentials Type is the type of the account. For a service account, the value of this field is service_account. To fetch this key, look for the value associated with the type key in the service account key file.

- projectId: A project ID is a unique string used to differentiate your project from all others in Google Cloud. To fetch this key, look for the value associated with the project_id key in the service account key file.

- privateKeyId: This is a unique identifier for the private key associated with the service account. To fetch this key, look for the value associated with the private_key_id key in the service account file.

- privateKey: This is the private key associated with the service account that is used to authenticate and authorize access to GCS. To fetch this key, look for the value associated with the private_key key in the service account file.

- clientEmail: This is the email address associated with the service account. To fetch this key, look for the value associated with the client_email key in the service account key file.

- clientId: This is a unique identifier for the service account. To fetch this key, look for the value associated with the client_id key in the service account key file.

- authUri: This is the URI for the authorization server. To fetch this key, look for the value associated with the auth_uri key in the service account key file. The default value for Auth URI is https://accounts.google.com/o/oauth2/auth.

- tokenUri: The Google Cloud Token URI is a specific endpoint used to obtain an OAuth 2.0 access token from the Google Cloud IAM service. This token allows you to authenticate and access various Google Cloud resources and APIs that require authorization. To fetch this key, look for the value associated with the token_uri key in the service account credentials file. The default value for Token URI is https://oauth2.googleapis.com/token.

- authProviderX509CertUrl: This is the URL of the certificate that verifies the authenticity of the authorization server. To fetch this key, look for the value associated with the auth_provider_x509_cert_url key in the service account key file. The default value for Auth Provider X509Cert URL is https://www.googleapis.com/oauth2/v1/certs.

- clientX509CertUrl: This is the URL of the certificate that verifies the authenticity of the service account. To fetch this key, look for the value associated with the client_x509_cert_url key in the service account key file.

2. Passing a local file path that contains the credentials:

gcpCredentialsPath

If you prefer to pass the credentials file, you can do so as follows:

- If you want to use ADC authentication for gcs you can just leave the GCP credentials empty. This is why they are not marked as required.

dbt Prefix Configuration

dbtPrefixConfig: Optional config to specify the bucket name and directory path where the dbt files are stored. If config is not provided ingestion will scan all the buckets for dbt files.

dbtBucketName: Name of the bucket where the dbt files are stored.

dbtObjectPrefix: Path of the folder where the dbt files are stored.

Follow the documentation here to configure multiple dbt projects
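For illustration, passing the raw credential values for GCS might look like the sketch below. The dbtSecurityConfig wrapper and the exact nesting of gcpConfig are assumptions that can differ between OpenMetadata versions, and all values are placeholders to be copied from your own service account key file.

```yaml
source:
  type: dbt
  serviceName: my_database_service   # placeholder service name
  sourceConfig:
    config:
      type: DBT
      dbtConfigSource:
        dbtConfigType: gcs
        # Assumed wrapper key; leave credentials empty to rely on ADC
        dbtSecurityConfig:
          gcpConfig:
            type: service_account
            projectId: my-project-id
            privateKeyId: "<private_key_id>"
            privateKey: "<private_key>"
            clientEmail: dbt-reader@my-project-id.iam.gserviceaccount.com
            clientId: "<client_id>"
            authUri: https://accounts.google.com/o/oauth2/auth
            tokenUri: https://oauth2.googleapis.com/token
            authProviderX509CertUrl: https://www.googleapis.com/oauth2/v1/certs
            clientX509CertUrl: "<client_x509_cert_url>"
        dbtPrefixConfig:
          dbtBucketName: my-dbt-bucket
          dbtObjectPrefix: dbt/target
```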

Source Config

dbtUpdateDescriptions: Configuration to update the description from dbt or not. If set to true descriptions from dbt will override the already present descriptions on the entity. For more details visit here

dbtUpdateOwners: Configuration to update the owner from dbt or not. If set to true owners from dbt will override the already present owners on the entity. For more details visit here

includeTags: true or false, to ingest tags from dbt. Default is true.

dbtClassificationName: Custom OpenMetadata Classification name for dbt tags.

databaseFilterPattern, schemaFilterPattern, tableFilterPattern: Add filters to filter out models from the dbt manifest. Note that the filter supports regex as include or exclude. You can find examples here

Sink Configuration

To send the metadata to OpenMetadata, it needs to be specified as type: metadata-rest.

Workflow Configuration

The main property here is the openMetadataServerConfig, where you can define the host and security provider of your OpenMetadata installation.

Logger Level

You can specify the loggerLevel depending on your needs. If you are trying to troubleshoot an ingestion, running with DEBUG will give you far more traces for identifying issues.

JWT Token

JWT tokens allow your clients to authenticate against the OpenMetadata server. You can find more details on enabling JWT tokens here.

Refer to the JWT Troubleshooting section for any issues in your JWT configuration.

Store Service Connection

If set to true (default), we will store the sensitive information either encrypted via the Fernet Key in the database or externally, if you have configured any Secrets Manager.

If set to false, the service will be created, but the service connection information will only be used by the Ingestion Framework at runtime, and won't be sent to the OpenMetadata server.

SSL Configuration

If you have added SSL to the OpenMetadata server, then you will need to handle the certificates when running the ingestion too. You can either set verifySSL to ignore, or have it as validate, which will require you to set the sslConfig.caCertificate with a local path where your ingestion runs that points to the server certificate file.

Find more information on how to troubleshoot SSL issues here.

ingestionPipelineFQN

Fully qualified name of ingestion pipeline, used to identify the current ingestion pipeline.

3. Azure Storage Buckets

In this configuration we will be fetching the dbt manifest.json, catalog.json and run_results.json files from an Azure Storage bucket.

Source Config - Type

- dbtConfigType: azure

- Client ID: This is the unique identifier for your application registered in Azure AD. It’s used in conjunction with the Client Secret to authenticate your application.

- Client Secret: A key that your application uses, along with the Client ID, to access Azure resources.

To get the client secret, follow these steps:

- Log into Microsoft Azure.

- Search for App registrations and select the App registrations link.

- Select the Azure AD app you're using for this connection.

- Under Manage, select Certificates & secrets.

- Under Client secrets, select New client secret.

- In the Add a client secret pop-up window, provide a description for your application secret. Choose when the secret should expire, and select Add.

- From the Client secrets section, copy the string in the Value column of the newly created application secret.

- Tenant ID: The unique identifier of the Azure AD instance under which your account and application are registered.

To get the tenant ID, follow these steps:

- Log into Microsoft Azure.

- Search for App registrations and select the App registrations link.

- Select the Azure AD app you're using for this connection.

- From the Overview section, copy the Directory (tenant) ID.

- Account Name: The name of your ADLS account.

Here are the step-by-step instructions for finding the account name for an Azure Data Lake Storage account:

- Sign in to the Azure portal and navigate to the Storage accounts page.

- Find the Data Lake Storage account you want to access and click on its name.

- In the account overview page, locate the Account name field. This is the unique identifier for the Data Lake Storage account.

- You can use this account name to access and manage the resources associated with the account, such as creating and managing containers and directories.

dbt Prefix Configuration

dbtPrefixConfig: Optional config to specify the bucket name and directory path where the dbt files are stored. If config is not provided ingestion will scan all the buckets for dbt files.

dbtBucketName: Name of the bucket where the dbt files are stored.

dbtObjectPrefix: Path of the folder where the dbt files are stored.

Follow the documentation here to configure multiple dbt projects
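A sketch of the Azure variant follows. The camelCase key names (clientId, clientSecret, tenantId, accountName) and the dbtSecurityConfig wrapper are assumptions derived from the field labels above, and all values are placeholders.

```yaml
source:
  type: dbt
  serviceName: my_database_service   # placeholder service name
  sourceConfig:
    config:
      type: DBT
      dbtConfigSource:
        dbtConfigType: azure
        # Assumed wrapper and key names for the Azure credentials
        dbtSecurityConfig:
          clientId: "<application-client-id>"
          clientSecret: "<client-secret-value>"
          tenantId: "<directory-tenant-id>"
          accountName: mystorageaccount
        dbtPrefixConfig:
          dbtBucketName: my-dbt-container
          dbtObjectPrefix: dbt/target
```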

Source Config

dbtUpdateDescriptions: Configuration to update the description from dbt or not. If set to true descriptions from dbt will override the already present descriptions on the entity. For more details visit here

dbtUpdateOwners: Configuration to update the owner from dbt or not. If set to true owners from dbt will override the already present owners on the entity. For more details visit here

includeTags: true or false, to ingest tags from dbt. Default is true.

dbtClassificationName: Custom OpenMetadata Classification name for dbt tags.

databaseFilterPattern, schemaFilterPattern, tableFilterPattern: Add filters to filter out models from the dbt manifest. Note that the filter supports regex as include or exclude. You can find examples here

Sink Configuration

To send the metadata to OpenMetadata, it needs to be specified as type: metadata-rest.

Workflow Configuration

The main property here is the openMetadataServerConfig, where you can define the host and security provider of your OpenMetadata installation.

Logger Level

You can specify the loggerLevel depending on your needs. If you are trying to troubleshoot an ingestion, running with DEBUG will give you far more traces for identifying issues.

JWT Token

JWT tokens allow your clients to authenticate against the OpenMetadata server. You can find more details on enabling JWT tokens here.

Refer to the JWT Troubleshooting section for any issues in your JWT configuration.

Store Service Connection

If set to true (default), we will store the sensitive information either encrypted via the Fernet Key in the database or externally, if you have configured any Secrets Manager.

If set to false, the service will be created, but the service connection information will only be used by the Ingestion Framework at runtime, and won't be sent to the OpenMetadata server.

SSL Configuration

If you have added SSL to the OpenMetadata server, then you will need to handle the certificates when running the ingestion too. You can either set verifySSL to ignore, or have it as validate, which will require you to set the sslConfig.caCertificate with a local path where your ingestion runs that points to the server certificate file.

Find more information on how to troubleshoot SSL issues here.

ingestionPipelineFQN

Fully qualified name of ingestion pipeline, used to identify the current ingestion pipeline.

4. Local Storage

In this configuration, we will be fetching the dbt manifest.json, catalog.json and run_results.json files from the same host that is running the ingestion process.

Source Config - Type

- dbtConfigType: local

- dbtCatalogFilePath: catalog.json file path to extract dbt models with their column schemas.

- dbtManifestFilePath (Required): manifest.json file path to extract dbt models with their column schemas.

- dbtRunResultsFilePath: run_results.json file path to extract dbt models tests and test results metadata. Tests from dbt will only be ingested if this file is present.
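The three file paths above might be set as in this sketch. The paths and service name are placeholders, and the nesting under sourceConfig.config.dbtConfigSource is an assumption about the workflow schema.

```yaml
source:
  type: dbt
  serviceName: my_database_service   # placeholder service name
  sourceConfig:
    config:
      type: DBT
      dbtConfigSource:
        dbtConfigType: local
        # Required
        dbtManifestFilePath: /opt/dbt/target/manifest.json
        # Optional
        dbtCatalogFilePath: /opt/dbt/target/catalog.json
        # Optional: dbt tests are ingested only when this file is present
        dbtRunResultsFilePath: /opt/dbt/target/run_results.json
```

The paths are resolved on the host that runs the ingestion process, so they must be readable from there.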

Source Config

dbtUpdateDescriptions: Configuration to update the description from dbt or not. If set to true descriptions from dbt will override the already present descriptions on the entity. For more details visit here

dbtUpdateOwners: Configuration to update the owner from dbt or not. If set to true owners from dbt will override the already present owners on the entity. For more details visit here

includeTags: true or false, to ingest tags from dbt. Default is true.

dbtClassificationName: Custom OpenMetadata Classification name for dbt tags.

databaseFilterPattern, schemaFilterPattern, tableFilterPattern: Add filters to filter out models from the dbt manifest. Note that the filter supports regex as include or exclude. You can find examples here

Sink Configuration

To send the metadata to OpenMetadata, it needs to be specified as type: metadata-rest.

Workflow Configuration

The main property here is the openMetadataServerConfig, where you can define the host and security provider of your OpenMetadata installation.

Logger Level

You can specify the loggerLevel depending on your needs. If you are trying to troubleshoot an ingestion, running with DEBUG will give you far more traces for identifying issues.

JWT Token

JWT tokens allow your clients to authenticate against the OpenMetadata server. You can find more details on enabling JWT tokens here.

Refer to the JWT Troubleshooting section for any issues in your JWT configuration.

Store Service Connection

If set to true (default), we will store the sensitive information either encrypted via the Fernet Key in the database or externally, if you have configured any Secrets Manager.

If set to false, the service will be created, but the service connection information will only be used by the Ingestion Framework at runtime, and won't be sent to the OpenMetadata server.

SSL Configuration

If you have added SSL to the OpenMetadata server, then you will need to handle the certificates when running the ingestion too. You can either set verifySSL to ignore, or have it as validate, which will require you to set the sslConfig.caCertificate with a local path where your ingestion runs that points to the server certificate file.

Find more information on how to troubleshoot SSL issues here.

ingestionPipelineFQN

Fully qualified name of ingestion pipeline, used to identify the current ingestion pipeline.

5. File Server

In this configuration we will be fetching the dbt manifest.json, catalog.json and run_results.json files from an HTTP or File Server.

Source Config - Type

- dbtConfigType: http

- dbtCatalogHttpPath: catalog.json http path to extract dbt models with their column schemas.

- dbtManifestHttpPath (Required): manifest.json http path to extract dbt models with their column schemas.

- dbtRunResultsHttpPath: run_results.json http path to extract dbt models tests and test results metadata. Tests from dbt will only be ingested if this file is present.
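For an HTTP or file server, the same idea could be sketched as below. The URLs and service name are placeholders, and the nesting under sourceConfig.config.dbtConfigSource is an assumption about the workflow schema.

```yaml
source:
  type: dbt
  serviceName: my_database_service   # placeholder service name
  sourceConfig:
    config:
      type: DBT
      dbtConfigSource:
        dbtConfigType: http
        # Required
        dbtManifestHttpPath: https://files.example.com/dbt/manifest.json
        # Optional
        dbtCatalogHttpPath: https://files.example.com/dbt/catalog.json
        # Optional: dbt tests are ingested only when this file is present
        dbtRunResultsHttpPath: https://files.example.com/dbt/run_results.json
```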

Source Config

dbtUpdateDescriptions: Configuration to update the description from dbt or not. If set to true descriptions from dbt will override the already present descriptions on the entity. For more details visit here

dbtUpdateOwners: Configuration to update the owner from dbt or not. If set to true owners from dbt will override the already present owners on the entity. For more details visit here

includeTags: true or false, to ingest tags from dbt. Default is true.

dbtClassificationName: Custom OpenMetadata Classification name for dbt tags.

databaseFilterPattern, schemaFilterPattern, tableFilterPattern: Add filters to filter out models from the dbt manifest. Note that the filter supports regex as include or exclude. You can find examples here

Sink Configuration

To send the metadata to OpenMetadata, it needs to be specified as type: metadata-rest.

Workflow Configuration

The main property here is the openMetadataServerConfig, where you can define the host and security provider of your OpenMetadata installation.

Logger Level

You can specify the loggerLevel depending on your needs. If you are trying to troubleshoot an ingestion, running with DEBUG will give you far more traces for identifying issues.

JWT Token

JWT tokens allow your clients to authenticate against the OpenMetadata server. You can find more details on enabling JWT tokens here.

Refer to the JWT Troubleshooting section for any issues in your JWT configuration.

Store Service Connection

If set to true (default), we will store the sensitive information either encrypted via the Fernet Key in the database or externally, if you have configured any Secrets Manager.

If set to false, the service will be created, but the service connection information will only be used by the Ingestion Framework at runtime, and won't be sent to the OpenMetadata server.

SSL Configuration

If you have added SSL to the OpenMetadata server, then you will need to handle the certificates when running the ingestion too. You can either set verifySSL to ignore, or have it as validate, which will require you to set the sslConfig.caCertificate with a local path where your ingestion runs that points to the server certificate file.

Find more information on how to troubleshoot SSL issues here.

ingestionPipelineFQN

Fully qualified name of ingestion pipeline, used to identify the current ingestion pipeline.

6. dbt Cloud

In this configuration we will be fetching the dbt manifest.json, catalog.json and run_results.json files from the dbt Cloud APIs.

The Account Viewer permission is the minimum requirement for the dbt cloud token.

The dbt Cloud workflow leverages the dbt Cloud v2 APIs to retrieve dbt run artifacts (manifest.json, catalog.json, and run_results.json) and ingest the dbt metadata.

It uses the /runs API to obtain the most recent successful dbt run, filtering by account_id, project_id and job_id if specified. The artifacts from this run are then collected using the /artifacts API.

Refer to the code here

Source Config - Type

- dbtConfigType: cloud

- dbtCloudAuthToken: Please follow the instructions in dbt Cloud's API documentation to create a dbt Cloud authentication token. The Account Viewer permission is the minimum requirement for the dbt Cloud token.

- dbtCloudAccountId (Required): To obtain your dbt Cloud account ID, sign in to dbt Cloud in your browser. Take note of the number directly following the accounts path component of the URL. This is your account ID.

For example, if the URL is https://cloud.getdbt.com/#/accounts/1234/projects/6789/dashboard/, the account ID is 1234.

- dbtCloudJobId: In case of multiple jobs in a dbt cloud account, specify the job's ID from which you want to extract the dbt run artifacts. If left empty, the dbt artifacts will be fetched from the most recent run on dbt cloud.

After creating a dbt job, take note of the url which will be similar to https://cloud.getdbt.com/#/accounts/1234/projects/6789/jobs/553344/. The job ID is 553344.

The value entered should be a numeric value.

- dbtCloudProjectId: In case of multiple projects in a dbt cloud account, specify the project's ID from which you want to extract the dbt run artifacts. If left empty, the dbt artifacts will be fetched from the most recent run on dbt cloud.

To find your project ID, sign in to your dbt cloud account and choose a specific project. Take note of the url which will be similar to https://cloud.getdbt.com/#/accounts/1234/settings/projects/6789/, the project ID is 6789.

The value entered should be a numeric value.

- dbtCloudUrl: URL to connect to your dbt Cloud instance, e.g., https://cloud.getdbt.com or https://emea.dbt.com/.
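Using the IDs from the example URLs above, a dbt Cloud configuration might look like the following sketch. The token is a placeholder, the nesting under sourceConfig.config.dbtConfigSource is an assumption, and quoting the numeric IDs as strings is also an assumption about how the schema types these fields.

```yaml
source:
  type: dbt
  serviceName: my_database_service   # placeholder service name
  sourceConfig:
    config:
      type: DBT
      dbtConfigSource:
        dbtConfigType: cloud
        dbtCloudAuthToken: "<dbt-cloud-token>"
        # Required: the number after /accounts/ in the dbt Cloud URL
        dbtCloudAccountId: "1234"
        # Optional: narrow the run lookup to one project and job
        dbtCloudProjectId: "6789"
        dbtCloudJobId: "553344"
        dbtCloudUrl: https://cloud.getdbt.com
```

Leaving out dbtCloudProjectId and dbtCloudJobId means the artifacts come from the most recent run in the account.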

Source Config

dbtUpdateDescriptions: Configuration to update the description from dbt or not. If set to true descriptions from dbt will override the already present descriptions on the entity. For more details visit here

dbtUpdateOwners: Configuration to update the owner from dbt or not. If set to true owners from dbt will override the already present owners on the entity. For more details visit here

includeTags: true or false, to ingest tags from dbt. Default is true.

dbtClassificationName: Custom OpenMetadata Classification name for dbt tags.

databaseFilterPattern, schemaFilterPattern, tableFilterPattern: Add filters to filter out models from the dbt manifest. Note that the filter supports regex as include or exclude. You can find examples here

Sink Configuration

To send the metadata to OpenMetadata, it needs to be specified as type: metadata-rest.

Workflow Configuration

The main property here is the openMetadataServerConfig, where you can define the host and security provider of your OpenMetadata installation.

Logger Level

You can specify the loggerLevel depending on your needs. If you are trying to troubleshoot an ingestion, running with DEBUG will give you far more traces for identifying issues.

JWT Token

JWT tokens allow your clients to authenticate against the OpenMetadata server. You can find more details on enabling JWT tokens here.

Refer to the JWT Troubleshooting section for any issues in your JWT configuration.

Store Service Connection

If set to true (default), we will store the sensitive information either encrypted via the Fernet Key in the database or externally, if you have configured any Secrets Manager.

If set to false, the service will be created, but the service connection information will only be used by the Ingestion Framework at runtime, and won't be sent to the OpenMetadata server.

SSL Configuration

If you have added SSL to the OpenMetadata server, then you will need to handle the certificates when running the ingestion too. You can either set verifySSL to ignore, or have it as validate, which will require you to set the sslConfig.caCertificate with a local path where your ingestion runs that points to the server certificate file.

Find more information on how to troubleshoot SSL issues here.

ingestionPipelineFQN

Fully qualified name of ingestion pipeline, used to identify the current ingestion pipeline.

2. Run the dbt ingestion

After saving the YAML config, run the dbt ingestion with the metadata CLI, passing the path to your file: metadata ingest-dbt -c <path-to-yaml>